There is so much of an artificial intelligence vibe around us (more in conversation, less so in substance) that I simply had to ask this: at what point does all this AI become a bit too much? For you, it may already have. My mind wandered towards this question as I sat through the engrossing Adobe MAX keynote, a little over a week ago. A few days later, Canva (incidentally, a rival to an extent) had its own AI push to talk about, one that builds on its successful integration of Leonardo.ai’s Phoenix models into a new product. Are both companies risking a scenario where the skill set you’d typically expect a designer to have is no longer necessary, with these powerful software tools bridging the gap to the desired end result? Could any human with some sense of aesthetics and design get the job done?

It may not be so simple. I asked Deepa Subramaniam, Vice President, Product Marketing, and Creative Professional at Adobe, whether the sheer amount of AI now potentially available is changing the definition of creativity. She believes that call should rest with the human who created, conceptualised or edited the piece. Whether to remove those pesky, eyesore electricity cables spoiling the frame of that gorgeous architecture you’ve just photographed, or not. Or whether to render the texture and colours of the sky as you saw them at sunset, instead of how the phone’s camera decided to process them. AI shouldn’t be making these calls; a human should.

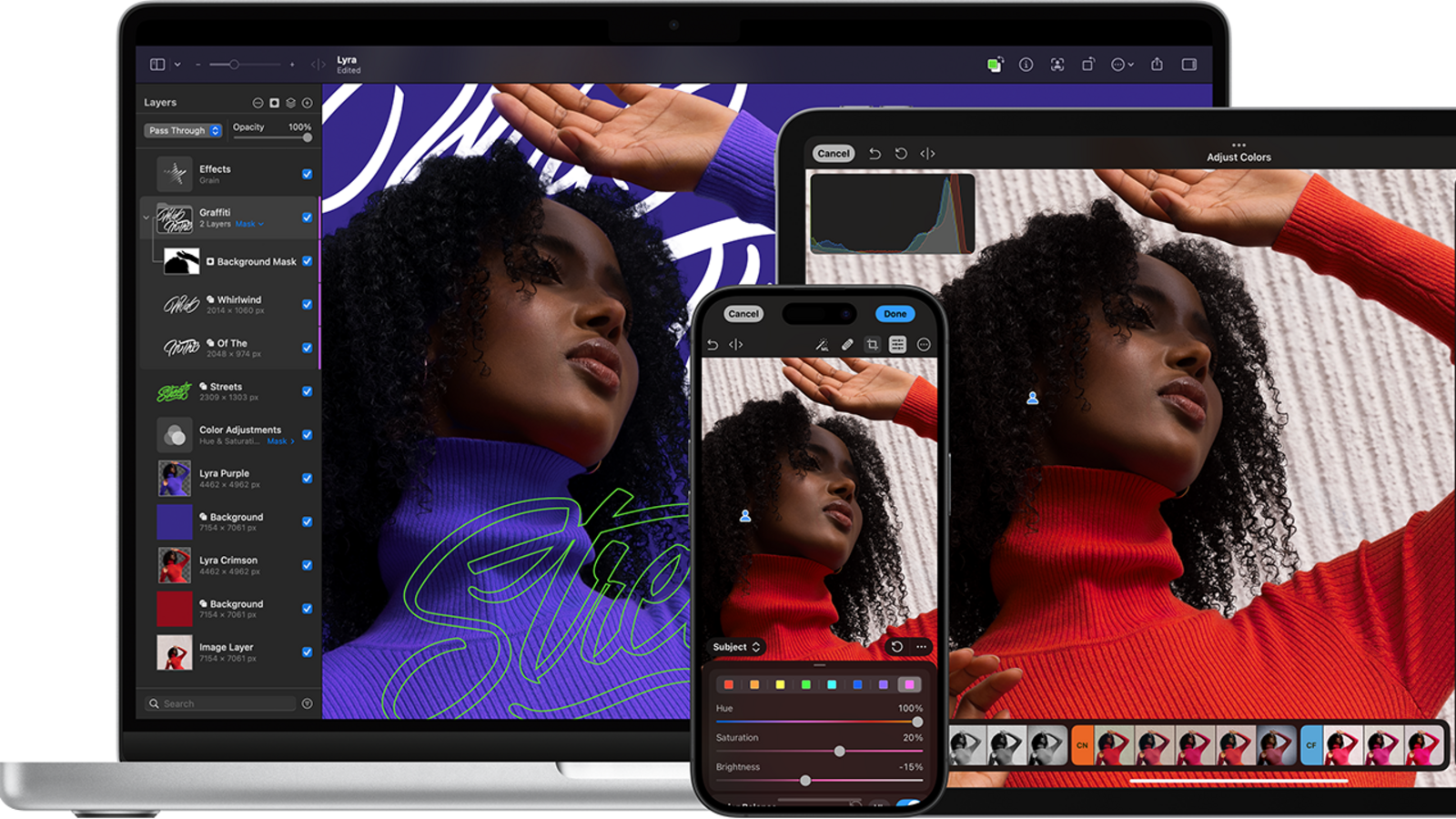

That may well be the point. AI can, and must, remain simply a tool, with human oversight whenever it is required (and it mostly is, believe me). Adobe’s AI-powered tools, Canva’s updates with newer and more powerful AI models at work, Pixelmator’s robust AI editing options, Otter’s AI transcripts of audio recordings and even Google’s AI Overviews in Search all leave room for a human to take corrective measures as and when needed. But do we?

Human intelligence still doesn’t seem to be identifying and correcting AI mistakes as often as it should

I was reminded of an article published in Nature earlier this year, which discussed how AI tools can often give their users a false impression that they understand a concept better than they actually do. One leads, and the other, willingly or out of a limited skill set and understanding, blissfully follows down the same path. It is perplexing that while more and more people are realising AI isn’t always right, human intelligence still doesn’t seem to be identifying and correcting those mistakes as often as it should.

Another interesting conversation this week was with Robert Kawalsky, Head of Product at Canva, on the sidelines of the company’s next big updates. Notably, Canva has effectively assimilated Leonardo.ai’s Phoenix foundational model into its platform less than three months after acquiring the company, creating a new product called Dream Lab. “One of the amazing things we noticed with Canva is, as soon as you make technology accessible and easier to use, the number of ways in which people use it are surprising,” he tells me.

Execs talk about AI, creativity and workflows…

POWER

It is that time of the year again, when chip giant Qualcomm gives us a first glimpse of what’ll power the next generation of Android flagship smartphones. This year, things have gotten really serious, and the confidence drawn from the Snapdragon X Elite ushering in the AI PC era is showing. The next flagship Qualcomm chip is called the Snapdragon 8 Elite, and the new naming scheme understandably seeks to draw parallels with that computing success (and, of course, the new neural processing unit). After all, the Snapdragon 8 Elite has the Apple A18 Pro to contend with. Whether it matches or surpasses Apple’s chip in real-world usage, we’ll find out in due course when the next line of Android flagships comes around (expect those to start queueing up from early 2025).

“Restoring leadership to the Android ecosystem” is how Qualcomm describes the Snapdragon 8 Elite chip

You may not have immediately noticed this, but the Snapdragon 8 Elite may well be ushering in the end of “efficiency” cores in flagship chips. It has a new architecture: two Prime cores and six Performance cores (the former clocked at 4.32GHz and the latter at 3.53GHz). Dropping efficiency cores is only really viable when Qualcomm is absolutely sure of its performance-per-watt metrics, and therefore battery life (its estimates suggest a 45% improvement in power efficiency compared with the predecessor). “Restoring leadership to the Android ecosystem” is how Qualcomm describes the performance of its 2nd gen Oryon CPU, which it claims surpasses the A18 Pro in its benchmark testing. Qualcomm also claims performance gains of more than 60% in certain use cases with select apps. Quite how big a leap your next Android flagship could be is worth contemplating. The software needs to keep up.

BIOMETRICS

Three years ago, Meta shut down Facebook’s facial recognition system after facing an inevitable privacy backlash. Now, the company says it will bring facial recognition back to its apps, as a method to fight online scams and to help users regain access to accounts that may have been compromised. The first implementation will weed out scam advertising, such as ads that use the faces of celebrities. Subsequently, it will tackle impostor accounts that try to dupe people into downloading malware, falling for the lure of free giveaways, sending money and so on.

“We’re now testing video selfies as a means for people to verify their identity and regain access to compromised accounts. The user will upload a video selfie and we’ll use facial recognition technology to compare the selfie to the profile pictures on the account they’re trying to access,” is how the company details regaining account access, should something go wrong.

As soon as someone uploads a video selfie, it will be encrypted and stored securely, says Meta

SUBSCRIBE

It is time to put the prepaid and postpaid mobile tariff hikes into perspective, now that we’ve had some runway since they were implemented to get a better sense of the trend. I had said then (you may read my take from that week in July) that we’d begin to see a drop in active mobile connections in subsequent months. The first to be discarded would be secondary, or even third, SIMs, as people keep expenses in check. More often than not, these are prepaid SIMs, used either for voice or data (an increasing number of tablets now have 4G/5G too). That is happening. Official data for the end of July, covering around four weeks since the tariff hikes took effect for prepaid recharges as well as postpaid bills, doesn’t make for pretty reading. Jio lost 0.7 million users, Airtel lost the most with 1.69 million connections either not recharged or closed postpaid lines, while an already cash-strapped and struggling Vi dropped 1.41 million users. Vi’s case is the most perplexing here: it decided to join Jio and Airtel in hiking tariffs despite already being at a significant network quality disadvantage, and without 5G to at least make a case for some value proposition. Give it another month, and this scenario will develop further.
